Whether you're a writer, artist, teacher, or really anyone who deals with creative work, AI is quickly becoming a boogeyman for all of us. And by boogeyman, I don't mean some made-up threat that only fools are afraid of. I mean an actual terrifying monster with the capability of devouring our livelihoods and turning our lives to misery, like the one that lived under my bed in my childhood home. I have the privilege of belonging to a very forward-thinking and close-knit English department that not only works hard to stay ahead of academic and technological trends, but also communicates continuously outside of meetings, in the halls, and in our department chat. When the subject of ChatGPT came up, as it has for teachers all over the country trying to get honest writing and critical thinking out of their students, the reaction was, in a word, fear.

First, I made an account, because I was the only one brave enough to feed our digital overlords the personal info that I assumed they already had. However, I have to say, I was highly offended by the CAPTCHA test I had to pass before finishing the sign-up. You might be familiar with CAPTCHA tests from many other online accounts; they test whether or not the potential user is an actual human. This is generally accomplished by the uniquely human task of clicking a box, or, if the method of box-clicking seems suspiciously robotic, choosing pictures with street lights or cars or trees in them, or really any object that robots apparently have no knowledge of. One shudders to think what we'll do when the bots evolve enough to recognize a bicycle. What you might not know is that CAPTCHA stands for "Completely Automated Public Turing test to tell Computers and Humans Apart." Sorry for the digression here, but I really was offended. Heated, even. How dare you, sir. The unmitigated gall of a machine, a bot, demanding that I prove my humanity. It felt like being required to take a background check to get into the underground gambling den.

Anyway, once I got over my outrage and indignation, I started running the queries we all agreed would be most concerning to our profession, and things got really scary, really fast. The first task we gave it was a simple essay on a prompt from To Kill a Mockingbird. I tried to make it a little more difficult for the bot by asking it to quote both the novel and another source. It dashed off a basic five-paragraph essay in less than a minute - nothing special, but certainly one that could pass for the work of an eighth through tenth grader.

So then I tried to turn up the heat with something more difficult, comparing two texts, each one somewhat challenging, on a very specific theme, with quotes from each. The results are below:

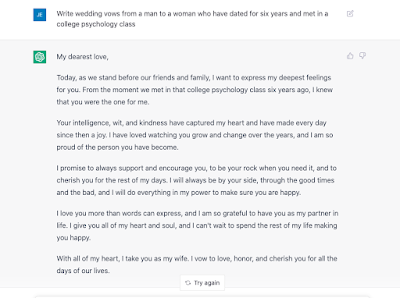

But forget about sermons. We know that some pastors out there have been caught plagiarizing, and I can see this AI becoming the next wave of that problem. But as disheartening as it might feel to find out your pastor wasn't really writing his sermons or considering his congregation's needs in preparing them, what about wedding vows? How would it feel to find out your husband got his "original" vows from a bot? Results follow:

I admit, I teared up a little reading this, not at the touching sentiment or the fear that some guy will inevitably use canned vows for his wedding, but at the idea that bots might some day be professing their love and pledging their troth to other bots. Or humans, I guess.

Jokes aside, all of my colleagues came away from the experiment with three findings:

1) That the bot can produce solid writing that meets the demands of a simple prompt and could pass for middle school or possibly high school work.

2) That such work is generally unemotional and tends to be very generic in its responses. It does a great job at incorporating quotes into its arguments, but the arguments themselves are passionless and uninspired.

3) Since a good deal of our students' writing is already solid, passionless, and uninspired, we need to develop a strategy for determining whether the text a student submits is their own or AI generated, and we need to do it now.

The major plans that we brainstormed include handwritten first drafts for every assigned essay, written in class under supervision/assistance. That way, we can compare later, typed drafts to the handwritten original to determine if the student has used any unauthorized help. In addition, we're a one-to-one Chromebook school, so we're looking into the possibility of disabling the internet connection and forcing students to use Google Docs offline - essentially turning their laptop into a typewriter, temporarily. This would, hopefully, deliver us the same honest work as pen and paper, without us having to decipher their handwriting.

Other than that, we're at a loss. There are supposed AI detectors that can determine whether the student used a bot, but they're not perfect, and I have a moral and professional problem with telling a parent I'm charging their student with academic dishonesty based on the word of a bot ratting out another bot. As with so many other developments in academia, this is just one more technology that is not only going to facilitate cheating, but also advantage privileged students with the most access to computers and the highest degree of "benefit of the doubt" from their teachers. For those reasons, we have to deal with this new tech and find ways to either combat it or team up with it, until, of course, it becomes self-aware and decides that we are the real problem, at which point high school essays won't matter so much anymore.